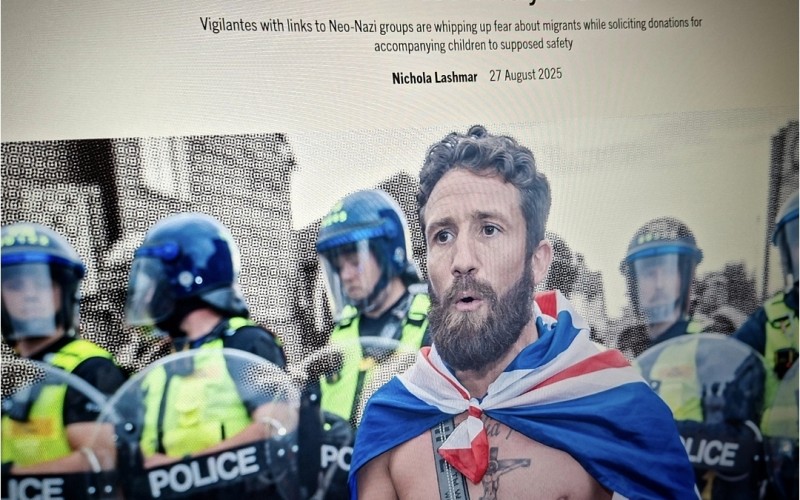

At the top end, far-right activist Tommy Robinson charges £28 per minute for coaching through the slick app Minnect, where followers can get his advice and viewpoints on topics related to anti-Islam activism and political commentary. Across the country, however, other local patrol groups are posing as charities and soliciting donations for ‘equipment’ while operating without police recognition.

Adults who work with Young People News

TikTok has become a go-to source for health advice for millions of people. But when you search for treatments for cancer and autism, the vast majority of the videos first served to you feature claims about treatments that are not supported by science.

Trade unions and online safety experts have urged MPs to investigate TikTok’s plans to make hundreds of jobs for UK-based content moderators redundant.

The video app company is planning 439 redundancies in its trust and safety team in London, leading to warnings that the job losses will have implications for online safety.

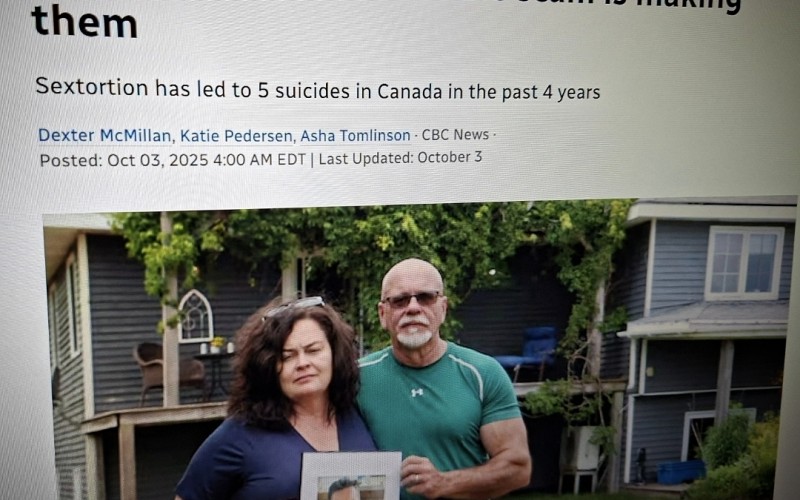

"I think this is the worst scam in the world," said Paul Raffile, a sextortion and cybercrime researcher based in Connecticut. "There is no other scam that involves targeting children, coercing them into a sexually compromising situation, exploiting them, blackmailing them. There's no other scams that I think even compare to this."

A new app offering to record your phone calls and pay you for the audio so it can sell the data to AI companies is, unbelievably, the No. 2 app in the Social Networking section of Apple’s U.S. App Store.

The app, Neon Mobile, pitches itself as a moneymaking tool offering “hundreds or even thousands of dollars per year” for access to your audio conversations.

In 2023/24, Department for Education data shows a record 11,614 suspensions were handed to pupils using apps like Instagram, TikTok and Twitter to bully their peers or share inappropriate content.

This marks an increase of over 75% since 2021.
